How do machines see without eyes?

An Excerpt on AI

“As nature discovered

early on, vision is one of the most powerful secret weapons of an intelligent

animal to navigate, survive, interact and change the complex world it lives in.

The same is true for intelligence systems. More than 80% of the web is data in

pixel format (photos, videos, etc.), there are more smartphones with

cameras than the number of people on earth, and every device, every machine and

every inch of our space is going to be powered by smart sensors. The only path

to build intelligent machines is to enable it with powerful visual

intelligence, just like what animals did in evolution. While many are searching

for the ‘killer app’ of vision, I’d say, vision is the ‘killer app’ of AI and

computing.”

Fei-Fei Li, interview

with TechCrunch (2017)

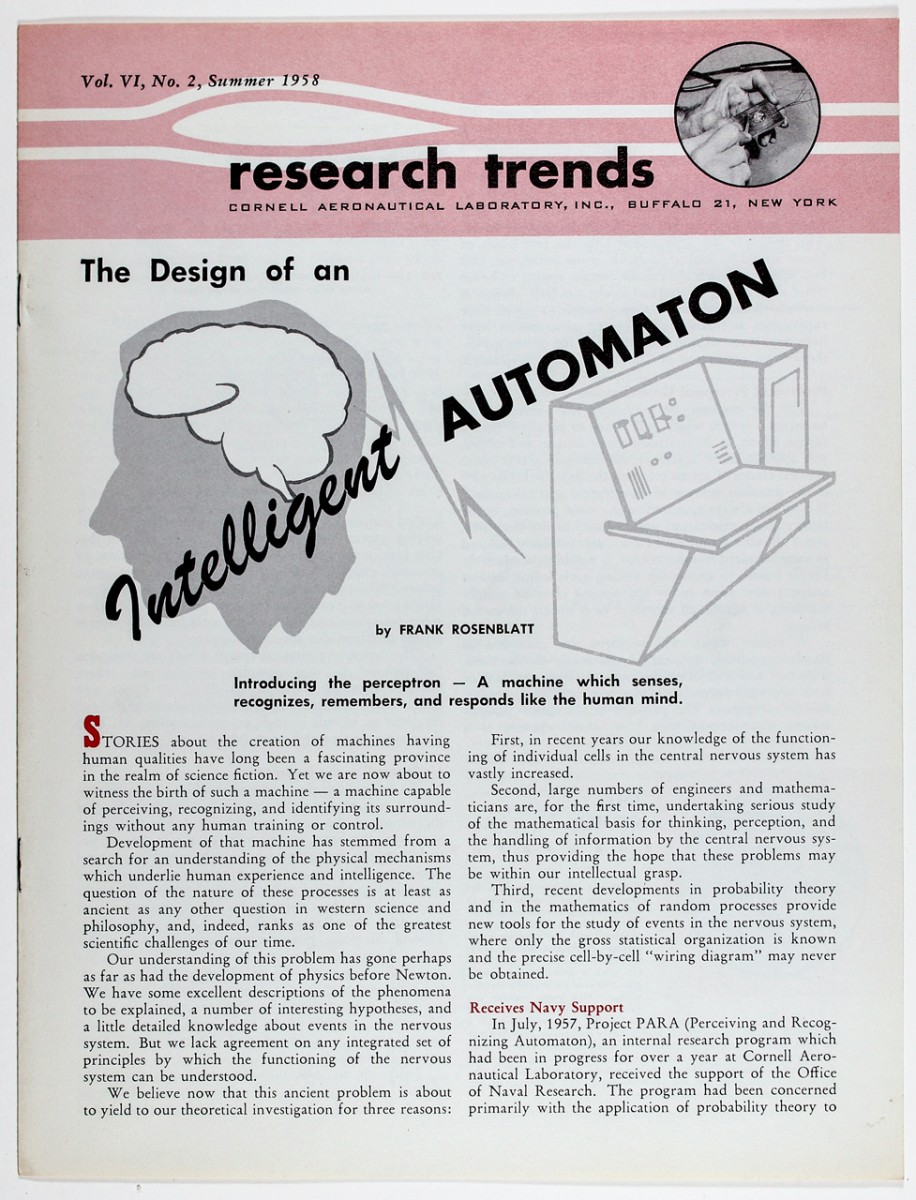

The shift to computation has enabled the path that leads to Artificial Intelligence. From the incipient days of the modern computer, figures like Alan Turing and Frank Rosenblatt conceived of it as a project to birth a form of intelligence. Today, the process of computational image production is increasingly automated with the help of AI. From subtle processing techniques to producing entirely synthetic images, the role of machine learning (ML) grows as a co-constructor of visuality and truth. ML is the umbrella term covering AI research that focuses on how deep neural networks learn from large datasets to produce a variety of desired (and undesired) outcomes through prediction. Since the early 2010s, ML has increasingly become the dominant form of AI research and engineering.

In 2016, DeepMind’s AlphaGo model famously defeated Lee Sedol, one of the best ever Go players in the world at the peak of his abilities. The wickedly inscrutable yet ultimately shrewd moves played by AlphaGo marked a cultural tipping point for the realization that AI and its bizarre forms of understanding had arrived. The system didn’t simply appear to win through its capability of sheer calculation of possible outcomes for each move, but rather through novel approaches and improbable decisions that defied the wisdom amassed by the masters of the art of Go over 2,000 years since its creation in ancient China.

Integral to the architecture of today’s most successful ML models are dozens of layers of artificial neural networks, designed to mimic how neurons fire and connect inside the human brain. As an input signal moves through the network during training, each node in these layers is forced to compress and learn (extract) features about its data, which it then transmits to other nodes. These transmissions, called weights, resemble synapses in the brain and are adjusted and optimized during training to guide the learning process towards desired outcomes. The weights store what, and how, the network has learned to create valuable relationships it can use to predict or generate new outputs similar to the data used to train it.

“Yet, what is this representation of data when it

is latent, emerging in high-dimensional space opaque to human comprehension?”

The most valuable connections made by the model occur in what are known as hidden layers, a latent space where models represent some correlational structure of their input data. Designed via principles of topology and vector math, the latent space, which informs the kinds of inferences and outputs any given model can make, is the epistemic space that comprises the so-called intelligence associated with machine learning. In other words, the latent space of representation is perhaps the equivalent of a world model for this new machine.

Yet, what is this representation of data when it is latent, emerging in high-dimensional space opaque to human comprehension? In decisions made by AI, the question of why is subsumed by what. One potential beauty of emerging AI is how it presents a rupture in the theory of language and logic that demand semantic meaning. [1] The abstractions mapped inside the layers of a neural network challenge and potentially transcend the requirements of human representation. Contained within them are patterns of thought that we can never consider, devoid of human comprehension. AI presents the potential for solving some intractable problems and understanding the universe deeper.

Anil Bawa-Cavia writes that there is no necessary bijection between language sets–different vocabularies do not necessarily correspond.[2] If indeed we are now confronted by a profoundly new form of inhuman intelligence we have birthed, how do we interface with it, and what are its limits? Can everything in the world be encoded and predicted probabilistically by billions of artificial neurons running on finite datasets such as the corpus of human language found on the Internet?[3] This is the expensive bet being made within much of the current market for AI. The push to reduce, or abstract, images into classifiable computational information called tokens is a part of this trend.

Katherine Hayles claims that remediation always entails translation, and that translation always entails interpretation.[4] In the process, something is lost, and something is gained. Another way to put this is that compression is almost always lossy. She dubs the encounter between older cultural practices associated with analog media and the strange flatness of digital media as intermediation. This concept feels useful in thinking about the back-and-forth transfer of knowledge between human and machine intelligence. Intermediation forces us to rethink fundamental questions such as “what is a text or an image?”.

Answers to this question can reveal surprising epistemic bias for a specific medium from a specific time and place that comes with its own historical limits. There is no convincing reason to prioritize one definition. In his approach to such a question, Jacques Derrida suggests the necessity of troubling (or putting “under erasure”) any one answer that tries to stake a definitive claim. There has never been a “genuine” or original concept of representation, only its trace. Or rather, all iterations are differences of the same essence, dependent on a set of contingent linguistic conditions.

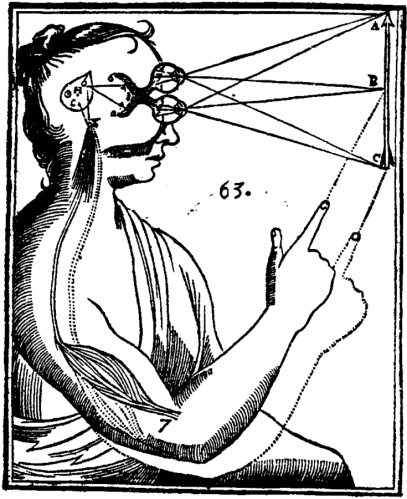

As computing power and algorithmic sophistication expand almost overnight alongside technological demand, new subfields such as computational photography have emerged to completely restructure how we humans produce, organize, and relate to images and visual representation. Although still very much material in the sense of requiring electrical signals, energy, hardware, and human labor, the network culture that upholds computational images adds new layers of abstraction on top of previous forms of media. New logics and properties emerge that, for example, unsettle our ideas of three-dimensional Euclidean space and chronological time. A machine can hold perspectives in nearly infinite ways, while digitization can collapse past(s) and present(s) into nearly instantaneous connection. Already in the late 1980s, art historian Jonathan Crary anticipated how the technical assembly of computer graphics nullified “most of the culturally established meanings of the terms observer and representation.” [5]

[1] I view artificial intelligence as both a field of research and a technology. I define it technically as a set of programmed instructions for predictive systems that can learn to evolve beyond their initial inputs and process human-level tasks (in some common ways not as well as humans, in some complex ways exponentially more efficiently than humans).

[2] Bawa-Cavia, Anil. Arity and Abstraction. Glass-Bead. http://www.glass-bead.org/research-platform/arity-abstraction/?lang=enview. 2017.

[3] I capitalize Internet, Western, and Artificial Intelligence to denote the particular historical character of these terms that are not general categories.

[4] Hayles, N K. My Mother Was a Computer: Digital Subjects and Literary Texts. Chicago: University of Chicago Press, 2005.

[5] Crary, Jonathan Techniques of the Observer. Pg. 1

Say Hi

KEEP GOING